Project Insights: How rendersnek Produced a Documentary-style ad with AI

Discover how rendersnek created a full FOOH concept using AI — from environments and characters to narration — and what studios can learn from it.

A guest article by rendersnek

Every FOOH or CGI studio has faced this problem at least once: a client walks in with an idea so big, so technically ambitious, it almost borders on a Hollywood production.

Last year, Kockum Sonics — a Swiss company specializing in acoustic alarm systems — came with an idea that, at that time, seemed too ambitious for a classic production.

This year, the situation had changed. With a wave of new AI tools now on the market, shots that once felt “impossible” suddenly became achievable on a fraction of the budget. So we revisited the concept — not just to fulfill the brief, but to use it as a testbed for today’s state-of-the-art AI pipeline.

We turned their vision into a full experiment: could we build an entire mini-documentary with AI, from environments and characters to narration and disaster scenes? And at the very end, we surprised the client with the result.

We knew from the very beginning that this wasn’t a task for a classic FOOH production, which we usually get hired for. Yet we still wanted to take it on. So it became an open-ended experiment: could an entire visual concept be produced exclusively with AI?

What started as a question mark turned into a fully realized 30 seconds AI — complete with environments, characters, disaster scenes, and an authentic narrator carrying the story. And this is how we made it happen.

Phase 1: Research & Reference Sourcing (Foundations for Image Creation)

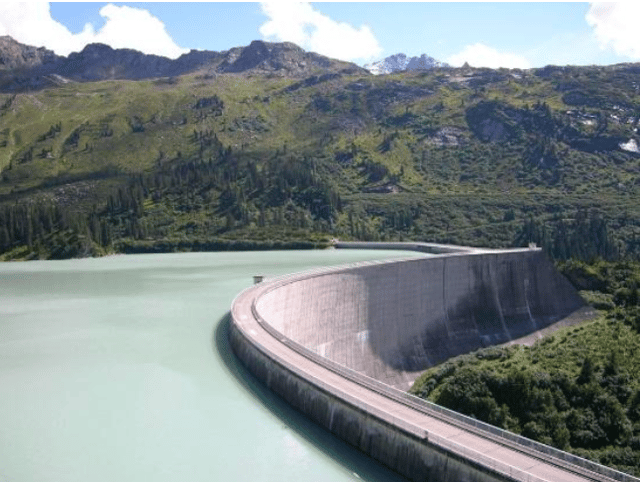

First, we started with reference hunting: reservoirs, dam architecture, alpine valleys. The Kaprun dam in Austria served as a particularly strong anchor for scale and structure. From there, ChatGPT acted as our image co-director. First, we started with reference hunting: reservoirs, dam architecture, alpine valleys. The Kaprun dam in Austria served as a particularly strong anchor for scale and structure.

From there, ChatGPT stepped in as our image co-director. We didn’t throw full scene descriptions at it right away. Instead, we worked in tight iterations: one visual cue at a time, often pausing to refine before adding the next detail — whether it was optics, location, or atmosphere.

Rather than scripting everything frame by frame, ChatGPT helped us translate these references directly into image prompts. That process became the driver for generating the first usable base images.

Phase 2: Base Image Creation — Characters and Environments

Two pillars drove this phase:

a documentary-style narrator (calm, intelligent, outdoors) and

the environmental base images (dam wall, reservoir, valley, forest lines, below-dam perspectives).

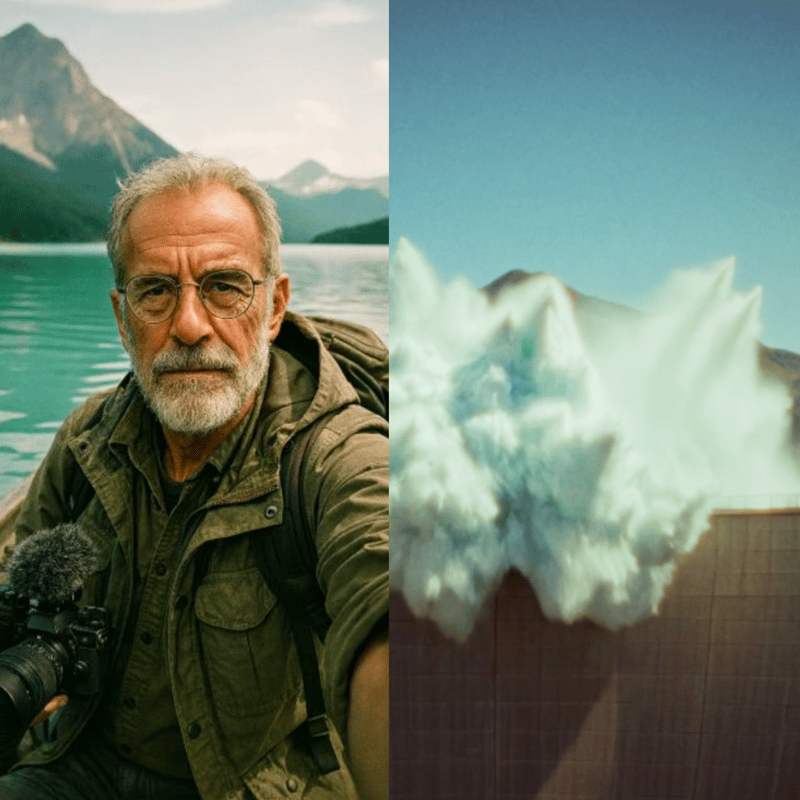

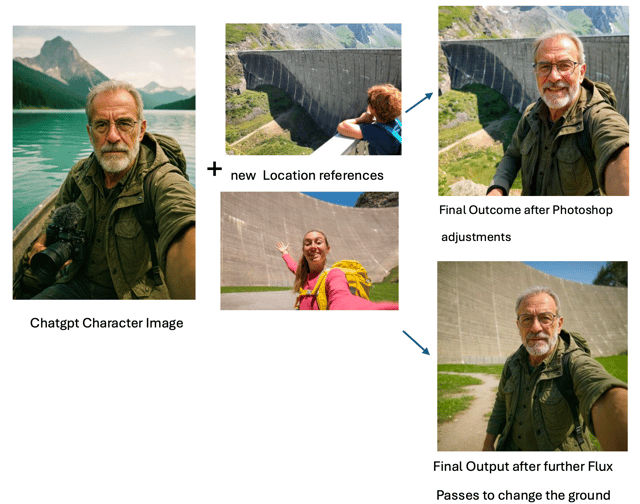

For the character, the challenge was to design someone who felt authentic, a figure that could convincingly carry a documentary voice across multiple scenes, while remaining visually consistent. We began with real-world references: older men with glasses, light beards, and outdoor clothing.

From there, we built the persona through an iterative prompt dialogue with ChatGPT. By moving in small steps — pausing for “wait for further instructions,” then layering new cues like location or camera optics — we shaped a photoreal narrator who never actually existed, yet instantly projected credibility and familiarity.

Prompt fragments used along the way (examples):

“Classic documentary speaker, older male 60–70, thin-frame glasses, light beard, explorer’s outfit.”

“Hyper-photorealistic, analog film look — Kodak Portra 400, Zeiss 50 mm, Minolta XD1, slight grain, 3:4 portrait.”

“He’s filming himself in selfie perspective on a rowboat in the reservoir, looking into the camera; place some outdoor/camera gear in the boat.”

The environments required a different kind of discipline: they had to remain geographically stable so that later video sequences would feel coherent. The Kaprun dam in Austria became a key anchor for scale and structure. Using this and other references, ChatGPT generated a first library of base images — from the dam wall and the reservoir to valley perspectives below. Photoshop’s Generative AI then refined and extended these outputs: correcting flaws, expanding frames into cinematic formats, and inserting small, identity-defining details such as huts or tree lines to ensure each setting looked authentic and connected.

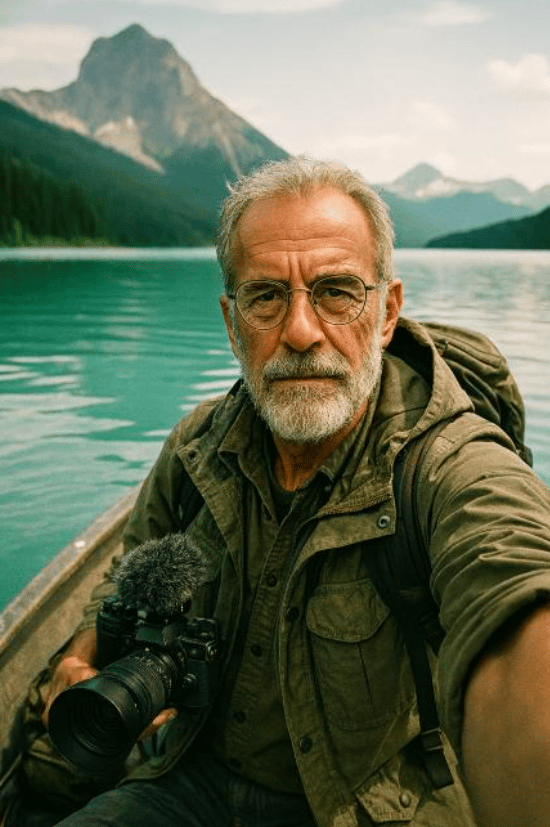

Phase 3: Consistency with the Flux Context Model

To broaden and stabilize the narrator’s look across settings, we used the Flux context model to generate additional vlog-style stills anchored to the base portrait. When outputs drifted (features, clothing, micro-style), we fixed them either in Photoshop or by re-running selected Fluxpasses until the character kit felt lock-solid.

Phase 4: Speaking Vlogs with Higgsfield (Google Veo 3, “Speak”)

With character stills ready, we moved to Higgsfield, which leverages Google Veo 3 and a built-in „Speak“ tool for speaking characters (lip sync, facial micro-movement, and audio).

What worked well on Higgsfield in practice:

- Consistency of voice identity even across different base images of the same character.

- Tight coupling of audio and generated movement within one workflow (fewer handoffs).

- Fast iteration for talking-head content — ideal for the vlog segments that “hold the viewer’s hand” through the story.

Example prompt used: “This documentary vlogger stands in front of a dam wall amidst serene nature and talks to his viewers with a calm and curious voice. He sounds like an old documentary speaker.”

Across takes and settings, the character retained the same vocal personality and visual coherence.

Phase 5: Disaster Cinematography with Kling 2.1 Master

For cinematic action and nature, we tested Veo 2, Veo 3, and others. Within Freepik’s AI suite, the clear winner for the complex disaster sequences was Kling 2.1 Master.It was the only model that, with enough iteration, could credibly render:

- a landslide cascading into a mountain lake (0:16),

- the ensuing flood wave threatening the valley (0:23), and

- The siren emitting shockwave-like pulses that meet and “neutralize” the wave (the film’s literal resolution) (0:30).

These prompts were highly specific and non-generic; most models struggled to parse them.

Kling wasn’t magical out-of-the-box, but it gave us usable building blocks after persistent experimentation.

To support the prompting process, we used the Freepik Prompt Enhancer, which helped articulate visual instructions more precisely — especially when the right language for a niche effect was hard to pin down.

For simpler sequences, Kling performed flawlessly with minimal effort — which is where observations like “realistic movement, smooth camera transitions, high prompt adherence, cost-effective” came from in the first place:

- establishing shots of the dam,

- wide aerial perspectives,

- smooth pans and camera behavior,

- convincing natural lighting.

Those were the places where it just worked — quickly and cleanly.

Prompt to produce the aerial shot:

“Camera slowly ascends, tilting downwards as the view transitions to a bird’s-eye perspective over the serene lake. The scene showcases the dam and surrounding mountains in vibrant detail, highlighting the natural beauty of the landscape.“

Phase 6: Voice Cloning with ElevenLabs

We wanted the same voice from the Higgsfield vlogs for a longer, scene-spanning voiceover. We started training a voice clone on Elvenlabs using the few Higgsfield clips available (not optimal by any means). Ideally, you’d train across the full range of a voice — different emotions, pacing, intensity, environments and so on.

Even so, the clone was surprisingly good given the limited training data: fairly consistent timbre, most of the time recognizably the same narrator, and good enough to carry the film’s voiceover with a few minor manual tweaks here and there. With a broader dataset, we’d expect far greater nuance, dynamic range, and realism.

Phase 7: Putting the clone to work and figuring out narrational emotion

It was now time to actually produce the voiceover for the film using the newly created voice clone. ChaGPT quickly came up with a text for the speaker, which after some human tweaks by our creative team was good to go for the voiceover.

Because early generations felt rather neutral we dug into ElevenLabs options and scripting tactics, researching what could be done. ElevenLabs officially recommends placing some kind of narrative direction after the spoken line in the script:

e.g., “Stationary sirens,” he said slowly and seriously.

Through testing, however, we discovered the opposite worked better: placing the emotional cue before the spoken line. For example:

Slowly and with gravity, he explains: “Stationary sirens.”

This method ensured that by the time the line was spoken, the AI voice was already “in Character.” The general downside of narrational cues: these leading cues have to be manually cut out afterward. The upside: the delivery is much much more convincing.

Other key techniques included:

- Using <break time=“1.0s” /> markers for precise pauses instead of relying on punctuation.

- Adjusting the Stability setting to balance between expressive variation and consistent tone

The combination of these approaches transformed the voiceover from robotic to narratively engaging.

Phase 8: Final Assembly & Sound

The final pass brought together:

- Vlog clips (Higgsfield / Veo 3 Speak),

- Disaster and landscape sequences (Freepik Kling 2.1 Master),

- Base images & environmental visuals generated primarily with ChatGPT (then refined via Photoshop’s Generative AI and, for continuity, the Flux context model)

- Voiceover (ElevenLabs clone with scripted narration),

- Sound design, editing, and timing (Manual polish with Davinci Resolve, also includes an automatic subtitling tool, which we used as a baseline for timing).

The finished piece is a cohesive, fully AI-generated short — human-directed. Machine-executed and manually cut and finished.

Reflections & Learnings

Looking back, this project showed us both the strengths and the limits of today’s AI pipeline. It’s worth noting that we conducted the experiment in July 2025 — and in the short time since then, several of the tools we used have already advanced significantly.

On the technical side, our key learnings are:

- Complex, non-generic prompts are still hard

- Simple, generic scenarios can be generated convincingly, with stunning visuals and strong prompt adherence.

- ChatGPT is real workhorse for image creation, translating references into usable base images and guiding an iterative workflow.

- Photoshop’s Generative AI is the glue, fixing, extending, and aligning shots into something coherent.

- Higgsfield (Veo 3 with its “Speak” feature) was surprisingly reliable for talking characters, keeping voice and identity consistent.

- Voice cloning proved viable even with minimal data, although more material would clearly unlock far greater nuance.

- Natural-sounding narration was possible with the right mix of emotional cues, timing adjustments, and manual editing.

- Above all, no single model covered everything — success came from tool-stacking and strong reference-driven direction.

But the bigger realization was more creative than technical. AI was never the creative director — it was the assistant. The visuals only worked because they were steered by research, preparation, and a clear creative vision. Every frame still required planning, experimentation, and decision-making.

So yes, AI can accelerate production and make big, “Hollywood-scale” concepts suddenly feel possible. But it also tempts you into a rabbit hole of perfectionism — chasing endlessly for the flawless shot that may never come. The discipline lies in knowing when to let go.

The real value of AI, we realized, lies in how it amplifies human direction. It makes things possible that once demanded enormous planning on the production side and vast resources in postproduction. Some shots wouldn’t have been feasible at all within most budgets.

In that sense, AI works like a shortcut — stripping away layers of logistics and enabling scenarios that feel truly unique. But the trade-off is that it requires a crystal-clear vision: what the outcome should look like, how it should feel, and how it should draw people in emotionally.

And while AI can save time, these productions are far from one-click wonders. Every sequence still takes countless iterations, cuts, and careful arrangement before it becomes convincing. The tools accelerate the work, but the craft remains in how you steer, refine, and assemble them.

That’s also what made sharing the result with the client so rewarding. After all the experiments, tests, and re-cuts, we were curious how they would respond.

And what did they say?

They were genuinely pleased that we revisited their idea and pushed to make their vision real in a new way. In the end, they not only embraced the outcome but also paid for the result — which meant the project covered our research costs while giving them a fresh, unexpected piece of storytelling.